NVIDIA GeForce RTX 3080

GeForce RTX 3080 at $699.

Faster than the GeForce RTX 2080 Ti in many games. Almost double the speed of the RTX 2080 in some games.

Seriously, that’s all you really need to know.

When NVIDIA announced the three cards a few weeks ago, I wasn’t expecting to be floored by what they talked about. Boy, was I surprised. It takes a lot to get me excited these days about hardware, having done this for a long, long time. But NVIDIA did just that. They got me really, really excited again.

The flagship for their new line is the GeForce RTX 3080. It’s the card that high-end gamers should get. NVIDIA said it can run almost twice as fast as the GeForce RTX 2080. Even I was skeptical about this, but there were a few early videos from sites showing massive improvements over Turing. So did NVIDIA truly release an architecture that delivers the promise of almost 2x performance over their top of the line previous gen card?

Let’s start with the hardware. The GeForce RTX 3080 has computational powers of 30 Shader-TFLOPS, 58 RT-TFLOPS, and 238 Tensor-TFLOPS. But what is in the hardware to deliver that? Let’s compare it to the RTX 2080 Ti and RTX 2080.

| | GeForce RTX 3080 | GeForce RTX 2080 Ti | GeForce RTX 2080 |
|---|---|---|---|
| SM | 68 | 68 | 46 |
| CUDA Cores | 8704 | 4352 | 2944 |
| Tensor Cores | 272 (3rd gen) | 544 (2nd gen) | 368 (2nd gen) |
| RT Cores | 68 (2nd gen) | 68 (1st gen) | 46 (1st gen) |
| Texture Units | 272 | 272 | 184 |
| ROPs | 96 | 88 | 64 |
| GPU Boost | 1710 MHz | 1635 MHz | 1800 MHz |
| Memory Clock | 9500 MHz | 7000 MHz | 7000 MHz |

While the RTX 3080 has fewer Tensor cores than the previous cards in the table, they use newer technology, so the card is more performant than the last generation. By raw numbers, NVIDIA is touting 2.7x the performance of the previous generation, with 238 Tensor-TFLOPS compared to 89 Tensor-TFLOPS. It has roughly double the CUDA cores of the RTX 2080 Ti, along with a higher GPU boost and a higher memory clock speed as well.
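For anyone who wants to check the math on those claims, here is a minimal sketch that works out the ratios from the figures quoted in this review. The variable and function names are my own and purely illustrative; nothing here comes from NVIDIA tooling.

```python
# Figures as quoted in the review text and spec table above.
TENSOR_TFLOPS_3080 = 238
TENSOR_TFLOPS_PREV_GEN = 89
BOOST_MHZ = {"RTX 3080": 1710, "RTX 2080 Ti": 1635}
MEMORY_MHZ = {"RTX 3080": 9500, "RTX 2080 Ti": 7000}


def ratio(new: float, old: float) -> float:
    """Return how many times larger `new` is than `old`."""
    return new / old


tensor_ratio = ratio(TENSOR_TFLOPS_3080, TENSOR_TFLOPS_PREV_GEN)
boost_ratio = ratio(BOOST_MHZ["RTX 3080"], BOOST_MHZ["RTX 2080 Ti"])
memory_ratio = ratio(MEMORY_MHZ["RTX 3080"], MEMORY_MHZ["RTX 2080 Ti"])

print(f"Tensor throughput: {tensor_ratio:.1f}x")
print(f"GPU boost clock:   {boost_ratio:.2f}x")
print(f"Memory clock:      {memory_ratio:.2f}x")
```

The Tensor figure rounds to the 2.7x NVIDIA quotes, while the boost clock gain over the RTX 2080 Ti is only a few percent, so most of the uplift has to come from elsewhere in the architecture.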

In the past, Founder’s Editions were based on reference designs, but this time around NVIDIA did more customization, so this card isn’t really a reference board. The PCB doesn’t span the entire length of the card, and the back area under the rear fan is entirely open for air to pass through. For those thinking of watercooling this card, you’ll have to make sure the block fits this card specifically, since cards from other AIBs show that their designs are totally different.

Also, they partnered with Samsung instead of TSMC and built off of an 8nm process. Samsung has done some quality stuff and I own a lot of Samsung phones and watches. So seeing NVIDIA partner with Samsung on this card was a rather interesting bit of news for the RTX 3080.

Memory-wise, a new type of memory is being used with the RTX 3080. Dubbed G6X, its improvement is that each signal on each cycle can represent one of four values versus two in the previous G6 memory, so each cycle carries twice as much data. That means double the bandwidth. The GeForce RTX 3080’s default configuration will be 10GB of G6X memory.
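To see why four values per cycle works out to double the bandwidth, here is a small sketch. It assumes the "four values versus two" description refers to the number of distinct signal levels per transfer, which is my reading of the text; the function name is hypothetical.

```python
import math


def bits_per_symbol(levels: int) -> float:
    """Bits carried by one transfer that can take `levels` distinct values."""
    return math.log2(levels)


g6_bits = bits_per_symbol(2)   # two signal levels -> 1 bit per transfer
g6x_bits = bits_per_symbol(4)  # four signal levels -> 2 bits per transfer
bandwidth_gain = g6x_bits / g6_bits

print(f"G6: {g6_bits:.0f} bit, G6X: {g6x_bits:.0f} bits, gain: {bandwidth_gain:.0f}x")
```

Doubling the number of levels adds one bit per transfer, so at the same signaling rate the data rate doubles.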

NVIDIA is now requiring a 12-volt 12-pin connector for the RTX 3080, and it has included an adapter for it. For those with a modular power supply, you might be able to just grab a new cable from your PSU's maker to connect your PSU to the card without an adapter. The connector on the card extends from the PCB at a 45-degree angle, and the design reduces the number of cables a flagship card traditionally needs from two to one. The RTX 2080 Ti used two 8-pin connectors, and the adapter NVIDIA includes turns two 8-pin connectors into one 12-pin connector. You might be wondering why the adapter is so short, as well as why the plug sits halfway along the card instead of at the end. As mentioned before, the PCB ends before the rear fan, so the plug is in fact near the end of the board. Now, could they have made an extension and put the connector at the end of the card? Maybe, but they had their reasons not to run an extension through the rear fan and heat sink area.

Another topic of discussion was why the adapter was so short with a split. I thought a little about this and the only thing I can come up with was so you could route the two 8-pin power cables on either side of the card and not hide the glowing GeForce RTX logo. Manufacturers of power supplies will be coming out with cables for their modular PSUs to accommodate the new power plug, but from what I’ve seen if you route it along the edge of the video card, it will block that logo. I guess you can route it through the back or front of it but then you have a chance of blocking air flow through that rear fan. For those that care about aesthetics, it’s certainly a small dilemma.

TDP on the RTX 3080 is 320W, which is a good bump from, say, the RTX 2080 Ti’s 260W and the RTX 2080’s 225W. So, you might need a little heftier power supply when you upgrade. I had some issues with my power supply whereby the system would shut down completely when gaming on the RTX 3080 but was fine on the RTX 2080 Ti. NVIDIA was kind enough to send me a replacement power supply at the same wattage to continue testing and that fixed the problem.

One of my main complaints of the Founder’s Edition of the GeForce RTX 2080 Ti was the fan. My god, did it get loud when under load. I thought my computer was going to take off when I was gaming and I was seriously considering getting an AIO to replace the card’s shroud setup. NVIDIA has a new design for cooling the RTX 3080 and, yes, it is a good bit quieter. It’s a 2-slot design, so, width-wise, it won’t take up more room if replacing a 2-slot card.

When gaming with the RTX 2080 Ti, I saw my temps max out at around 81C, but you’d hear the fans ramp up significantly until the temps dropped a few degrees, at which point the fans slowed down to a more bearable noise level. That is, until the temps rose again and we got this vicious cycle of loud fan noise for a few minutes before the fans cooled the card enough to ramp down again.

With the flow-through design, the RTX 3080 has a front-bottom fan that pulls in air and expels it out the back of the large bracket vent. A rear-top fan also pulls air from underneath and cools the rear components while expelling the hot air over where the CPU sits. Now, I don’t have a traditional fan and heatsink CPU cooler so I couldn’t say whether this design increased the temperature on the CPU to any meaningful level, but those with AIOs or water coolers shouldn’t see anything different. If you’re going to vertically mount it, well now you have warm air expelling onto your motherboard, which could be where the chipset or NVMe is located and that could have some effect on the temperature of the drives.

What’s crazy is that at idle, or while doing work (for me, running an instance of Visual Studio, SQL Enterprise Management, remote desktop, Slack, and a browser), the fans don’t run at all. I kept staring at it waiting for the fans to spin up, but there was nothing. With nothing running, the RTX 3080 would sit at a clock speed of 210MHz and a memory speed of 405MHz. Temperatures hovered around 41C. As I’m typing this in Google Docs, I’d see an occasional jump to 250MHz maybe, but for the most part it stuck at 210MHz and 405MHz for GPU and memory. When watching YouTube at 1440p, it would start at 1710MHz and 9501MHz for GPU and memory, and then as the video progressed it would peak at 480MHz and 810MHz while dipping to 330MHz and 405MHz. It would bounce around between the two sets of values depending on the scene. The heat sink design of the RTX 3080 allows the card to be cooled without the internal fans running, provided you have decent enough airflow through the case. I verified with NVIDIA and they said they implemented a fan stop mechanism that kicks in when the card is under a certain temperature and power, since the design can keep the card cool without the fans spinning, basically making the card run silent. That’s pretty cool.

While on load the card also reached levels of around 80C when playing the same games according to MSI Afterburner, the sound was a lot better. I don’t have the tools to measure the dBA of the cards, but just on basic experience using my ears, it’s much improved over the RTX 2080 Ti. I still hear it when it ramps up, but it’s a lot smoother and it’s a lot less audible and jarring. My computer doesn’t sound like a jet engine anymore and the ramp up and ramp down transitions are not annoying.

For those that want to show off the card, I feel the design of the RTX 3080 Founder’s Edition is a nice step up from the RTX 2080 Ti Founder’s Edition card. That’s not to say I didn’t like the two fan metallic shroud approach of that card. The RTX 3080 shows off the heat sink fins proudly with a satisfying symmetrical pattern on the bottom and a solid covering for three-fourths of the card on the top with an RTX 3080 branding. When turned on, the GeForce RTX logo lights up with a warm white LED and there’s also a white LED on the angle edges of the shroud. The lighting effects aren’t overly exaggerated and exhibit a minimalistic approach, which I appreciate as one of my pet peeves is annoyingly loud computer lighting setups. For now, you can’t change the color and I don’t even know if it’s a multicolored LED on the card, but who knows for the future.

You won’t see any screws as they are all covered up, making it a pretty clean design. The pewter accent complements the black fins and covers well. It’s designed to be efficient in cooling and pleasing to the eye and I think NVIDIA did a pretty good job here in achieving both.

As mentioned before, width-wise the card will fit a 2-slot configuration nicely. It is a little longer than the RTX 2080 Ti and RTX 2080 though, so you’ll have to take the increased length into consideration when putting this in a case of your choosing.

On the bracket for the Founder’s Edition are three DisplayPort connectors and one HDMI 2.1 port, all lined up in a row. Whereas the Founder’s Edition RTX 2080 Ti had the ports spread across both brackets, I like the single file design of the connectors, which makes it easy to connect my multiple monitors and VR headsets.

HDMI 2.1 affords 8K 60FPS HDR support, making this card future proof for the displays consumers will be buying in the coming years. DisplayPort 1.4a doesn't have as much bandwidth as HDMI 2.1, with 32.4 Gbps compared to 48 Gbps. Speaking of HDR, the RTX 3080 supports 10-bit and 12-bit HDR. For those of us who dabble in home theater PCs, this is a nice feature if you ever decide to upgrade your TV.
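To put those link speeds in perspective, here is a rough back-of-the-envelope sketch of the raw pixel data rate for an 8K 60FPS 10-bit signal. It is an estimate under stated assumptions only: it ignores blanking intervals and link encoding overhead, assumes three full-resolution color channels, and uses a helper name I made up for illustration.

```python
HDMI_2_1_GBPS = 48.0   # link bandwidth quoted above
DP_1_4A_GBPS = 32.4    # link bandwidth quoted above


def raw_video_gbps(width: int, height: int, fps: int,
                   bits_per_channel: int, channels: int = 3) -> float:
    """Uncompressed pixel data rate in Gbps, ignoring blanking and encoding."""
    return width * height * fps * bits_per_channel * channels / 1e9


eight_k_hdr = raw_video_gbps(7680, 4320, 60, 10)

print(f"8K60 10-bit raw data rate: {eight_k_hdr:.1f} Gbps")
print(f"HDMI 2.1 vs DisplayPort 1.4a: {HDMI_2_1_GBPS / DP_1_4A_GBPS:.2f}x")
```

Under these assumptions the raw figure comes out above even the 48 Gbps link, which is presumably why 8K 60FPS modes lean on stream compression or chroma subsampling rather than sending every pixel uncompressed.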

VirtualLink seems to be dead as there’s no USB-C port on the card. The port was never fully realized and the one product that I wanted to use with it, the Valve Index, had its VirtualLink box cancelled because of reliability issues. That said, wireless is the future of VR, and while having a single cable would have been nice in the interim, it just wasn’t meant to be.

Also missing is the SLI connector, so we might be looking at two technologies, one of them long-standing in the graphics world, ending with the RTX 30 series. I was never a fan of SLI as it didn’t show gains on the majority of titles that would warrant spending double on video cards, so I’m pretty satisfied with it not being included.

The entire design of the GeForce RTX 3080 makes it one heavy and solid card. There’s a great deal of heft to it when you try and pick it up. You could definitely use it as a deadly weapon if you hurl this at someone. Please don’t throw this card at anyone.

OK, let’s get to how games do on this beast. I game on an ultrawide screen monitor. It’s my go-to because I love the extra real estate that it affords me. It’s also great for productivity. So while it’s not a 4K display, it’s still pushing roughly 4.9 million pixels. My test system consists of:

Intel i7-9700K

32GB DDR4-3200 G.Skill Ripjaws RAM

MSI MPG Z390 Gaming Pro Carbon

1TB Western Digital NVMe

Ultrawide 3440x1440 100Hz monitor
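As a quick sanity check on the pixel count mentioned above, this short sketch compares the ultrawide panel with a standard 4K (3840x2160) display. The 4K resolution is the only figure not taken from the review.

```python
ULTRAWIDE = (3440, 1440)  # monitor used in this review
UHD_4K = (3840, 2160)     # standard 4K resolution, added for comparison


def pixel_count(resolution: tuple[int, int]) -> int:
    """Total number of pixels for a (width, height) resolution."""
    width, height = resolution
    return width * height


ultrawide_pixels = pixel_count(ULTRAWIDE)
uhd_pixels = pixel_count(UHD_4K)
share_of_4k = ultrawide_pixels / uhd_pixels

print(f"Ultrawide: {ultrawide_pixels:,} pixels")
print(f"4K:        {uhd_pixels:,} pixels")
print(f"Ultrawide renders about {share_of_4k:.0%} of the pixels of 4K")
```

So the results below sit somewhere between typical 1440p and 4K numbers, which is worth keeping in mind when comparing them with other reviews.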

Instead of putting this in a test bench, I have all the components in an NZXT H510 case. This would be a more accurate representation of people’s setup over that of having it on a test bench. Installation was pretty easy and the card fit right in there with my NZXT AIO cooler with front-mounted radiator and fan setup. I mounted the card horizontally even though I did have the option to do a vertical mount. I just don’t trust my riser cable as I think I’ve had random crashes when using it in a previous card.

All tests were run three times with an average of the three runs shown in the graph. When no built in benchmark was available, I tried to run through a level that I could confidently replicate consistently. For all the settings used, you can click on the images below in the gallery to see what was set.

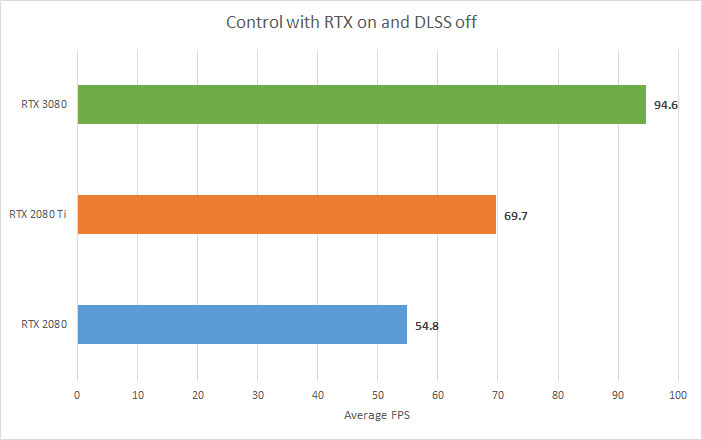

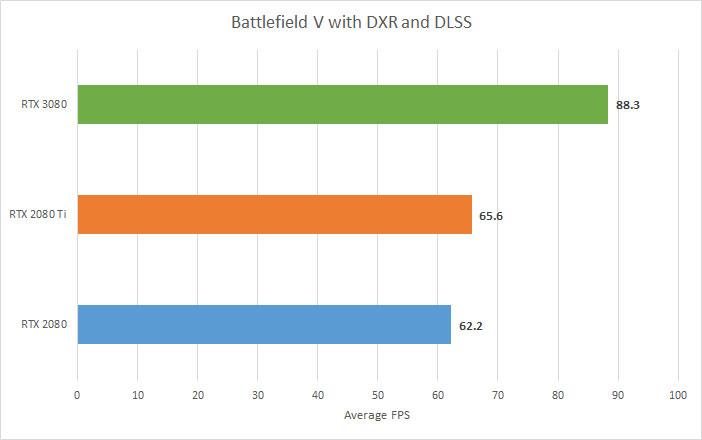

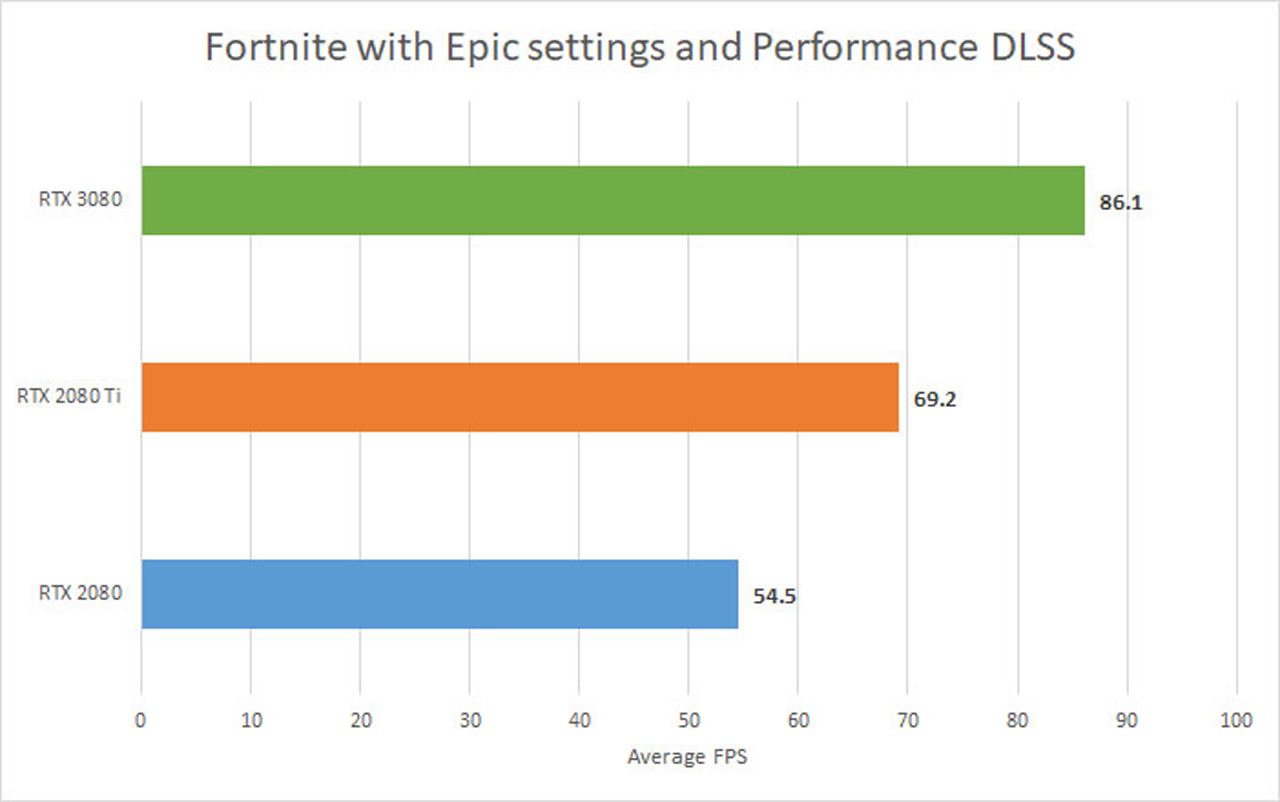

For games like Minecraft, Control and Fortnite, I just ran the tests with RTX features enabled. Fortnite just came out with the patch to enable RTX features and I used the RTX Treasure Run map to produce these numbers. Some, as marked, were run with DLSS turned off and then on.

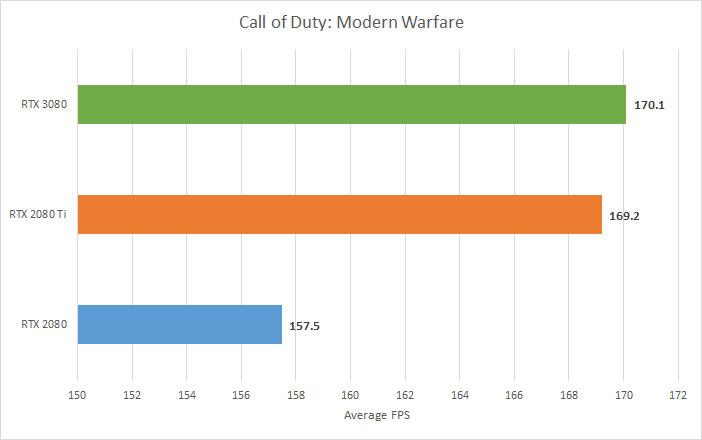

Now the next two games, Call of Duty: Modern Warfare and Microsoft Flight Simulator 2020, showed pretty much zero improvement over the RTX 2080 Ti. I was checking MSI Afterburner and it showed GPU usage of only about 50% or so in both games. Scouring the internet, it seems I'm not the only one seeing this. So, for now, it looks like these two are CPU-bound at this resolution. I do know that Microsoft Flight Simulator 2020 is still using DirectX 11, so we'll see if it improves when the game gets updated in the future with DirectX 12.

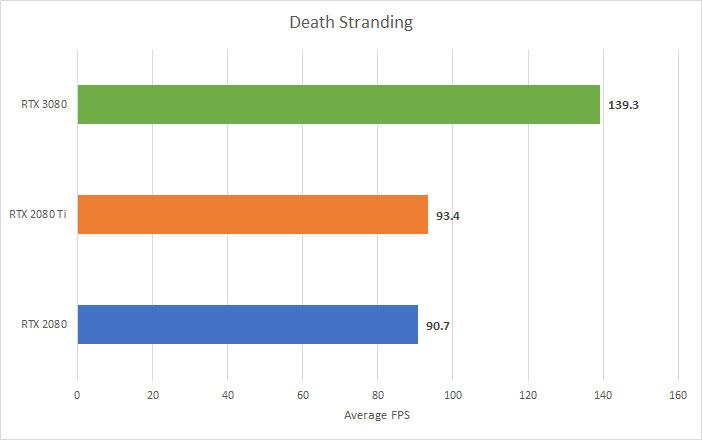

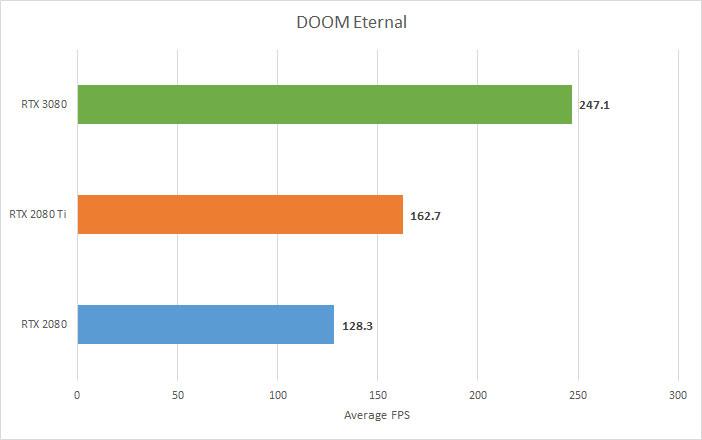

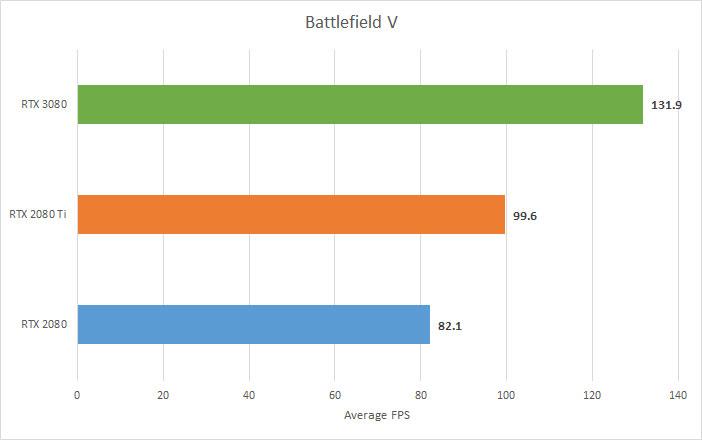

OK, it doesn’t get double the performance of the RTX 2080 on all games, but in some cases it does! Minecraft with RTX on and DOOM Eternal showed the most improvement, with 106% and 93% respectively. We're talking, on average, about a 63% improvement over the RTX 2080 and about a 40% improvement over the RTX 2080 Ti. That’s an incredible feat to create a card that has such a large increase in performance across the board where the games aren’t CPU-bound on my system. I’ll take that all day with the next generation of cards that come down the pipe.
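For clarity on how percentages like these are typically derived, here is a minimal sketch that averages three runs per card and then computes the improvement. The FPS values are hypothetical placeholders, not measurements from this review, and the function names are my own.

```python
from statistics import mean


def average_fps(runs: list[float]) -> float:
    """Average FPS over repeated benchmark runs."""
    return mean(runs)


def percent_improvement(new_fps: float, old_fps: float) -> float:
    """Improvement of new_fps over old_fps; 100 means twice as fast."""
    return (new_fps / old_fps - 1) * 100


# Hypothetical numbers for illustration only.
new_card_runs = [101.0, 99.0, 100.0]
old_card_runs = [51.0, 49.0, 50.0]

uplift = percent_improvement(average_fps(new_card_runs),
                             average_fps(old_card_runs))
print(f"Improvement: {uplift:.0f}%")
```

By this definition a 100% improvement is exactly 2x, so the 106% result in Minecraft with RTX on is slightly better than double, and 93% in DOOM Eternal is just shy of it.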

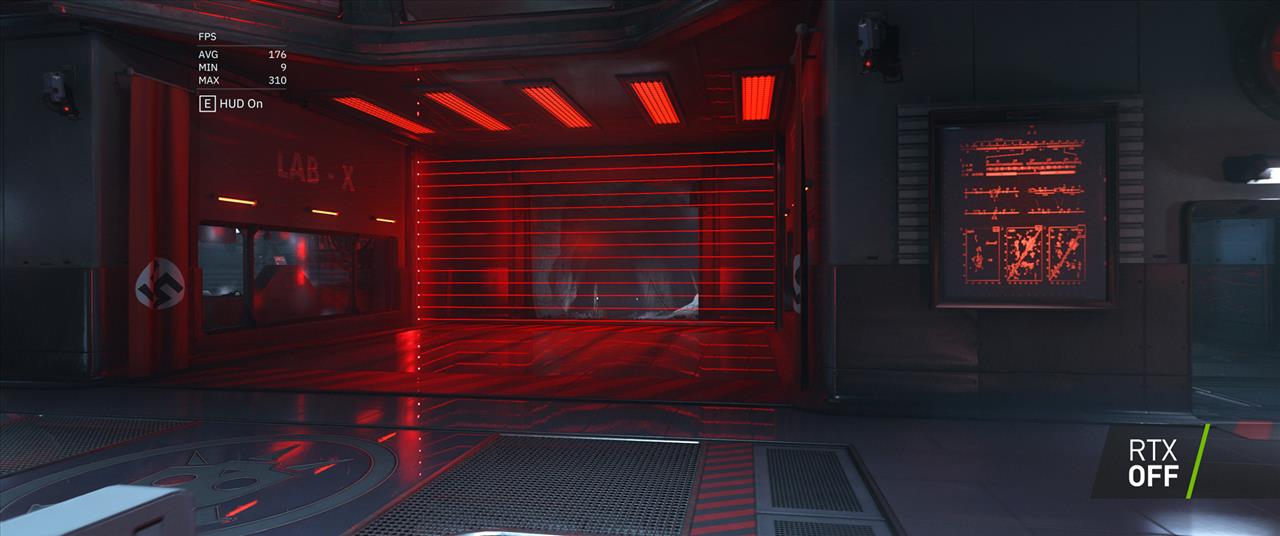

Enabling RTX features allows for greater visual experiences like in Minecraft and Control, and it's certainly what I’ll be doing from now on with games that support it. On Wolfenstein: Youngblood it produced much more accurate reflections of lasers on the ground versus RTX off. With RTX off, the lasers had a weird bend to them in the reflection instead of being straight. More accurate reflections and lighting can make for a much better visual gaming experience, and with the RTX 3080, you have the horsepower to run it at high resolutions. With my RTX 2080 Ti, I disabled RTX even if a game supported it, but now it looks like I’ll be defaulting to having it on. Enabling DLSS takes the performance even further, but some games might not look as good with it on in certain situations. For the most part, DLSS does a really great job at upscaling the picture with little to no visual degradation or artifacts. But like I said, you might run into spots here and there where the image isn't as good. Combine ray tracing with DLSS and you could get awesome image quality and great performance.

Compared to the RTX 2080 Ti, the gap in games isn't as wide but it’s still there. Red Dead Redemption 2 stayed over 60 FPS a lot more in the built-in benchmark, with an average that makes the game look gorgeous and fast even with most settings set at Ultra or High. That’s something the RTX 2080 Ti can’t do. Games like Microsoft Flight Simulator 2020 and Call of Duty: Modern Warfare saw negligible improvement, but I’m hoping updates to Microsoft Flight Simulator 2020 will improve on that, as MSI Afterburner reported it was only using 50% of the GPU when I was flying through Las Vegas.

So now that we’ve gotten game performances out of the way, what about some other features that NVIDIA has built into the architecture?

Streamers and YouTube video game creators can now capture up to 8K 30FPS of their gameplay sessions. That means higher resolution game video for their audiences to watch. HDR is also available and that is on all resolutions. For me, HDR is a lot more visually appealing than resolution, so having the ability to record in HDR on all resolutions for sharing gaming video is a bigger grab here.

For those starting to stream or wanting to maybe streamline their setup, NVIDIA Broadcast will help deliver higher quality audio and video with the help of its Tensor Cores. I’ve used RTX Voice and it does a really good job of cancelling outside noise while focusing on your voice when talking. NVIDIA demonstrated the ability to have a green screen-like effect by replacing the background around the person with something else. I tried NVIDIA Broadcast and saw it did a solid job at background removal, replacement, and blurring. I was able to do a few things to cause some areas to have artifacting. Even areas that weren't near me lost some of the background removal if I positioned my arm a certain way. The camera center option did sometimes lag a little to keep up, but with more improvements in the software, I think NVIDIA Broadcast could be a nice little piece of software for up and coming streamers who can’t afford an entire setup yet, but want the neat effects it can provide.

I’m always looking to improve my home NAS with my kids’ movies as well as my collection. Currently, all my 4K videos are encoded in HEVC because all my devices support hardware decoding for HEVC. But I always welcome more efficient codecs and that’s where AV1 comes in. AV1 looks to be the next generation codec for high resolution video, especially the 8K kind. But decoding can be pretty CPU intensive. Ampere allows for AV1 hardware decoding, so just like the decision to put an HDMI 2.1 connector on board, the RTX 3080 is ready for decoding AV1, thus future proofing the card for media consumption purposes. I’ve always used NVIDIA cards in my home theater PCs because of decisions like this and I can’t wait to see small form factor Ampere cards come out that will fit nicely in HTPCs.

So it comes down to this. NVIDIA’s GeForce RTX 3080 is a huge winner. It delivers on the performance at a price that’s unbeatable. If you had told me that two years after the 2080 Ti, NVIDIA would launch a card that beats its performance by this much at nearly half the price, I would have laughed at you. Yet here it is. Now, not all games will get that performance boost, but at the very least it’ll be faster than a card that’s a lot more expensive. And yes, this card makes RTX viable to the point where I will be using it in the games I play at the higher resolution.

It does draw a not insignificant amount of power over the RTX 2080 and RTX 2080 Ti so you’ll have to take that into consideration. As in my tests, my old 750W power supply had no issues with the RTX 2080 Ti, but the RTX 3080 would shut my system down after about 10 minutes of gaming. Changing to another 750W power supply solved that problem, but you might want to think about that if you’re considering purchasing the RTX 3080.

Hopefully, the supply will be there from various OEMs and NVIDIA to meet the demand because I have a feeling a lot of people are going to be lined up to purchase this one. It’s a worthy upgrade even over the high end Turing cards that are currently available. That's not to say you should buy it if you own an RTX 2080 Ti. The RTX 2080 Ti is still a blazingly fast card with all the RTX goodies in place. There's nothing wrong with it and you should still feel pretty happy with it if you purchased it recently. If you don't have an RTX card though and will be playing at 1440p and higher or on an ultrawide, this is a great upgrade.

There’s not much to be happy about in 2020, but NVIDIA has come out and provided something to really be excited for. Ampere is here and, while it’s not quite 2x performance over the RTX 2080 in all situations, it’s a damn fast card at a price that’s pleasantly surprising.

$699. Incredible performance. RTX features can now be turned on without fear of crushing game performance. It's a must buy for gaming enthusiasts.

Rating: 9.5 Excellent

* The product in this article was sent to us by the developer/company.

About Author

I've been reviewing products since 1997 and started out at Gaming Nexus. As one of the original writers, I was tapped to do action games and hardware. Nowadays, I work with a great group of folks on here to bring to you news and reviews on all things PC and consoles.

As for what I enjoy, I love action and survival games. I'm more of a PC gamer now than I used to be, but still enjoy the occasional console fare. Lately, I've been really playing a ton of retro games after building an arcade cabinet for myself and the kids. There are some old games I love to revisit and the cabinet really does a great job at bringing back that nostalgic feeling of going to the arcade.
